Unbiased Photon Gathering for Light Transport Simulation

Hao Qin, Xin Sun, Qiming Hou, Baining Guo, Kun Zhou

ACM Transactions on Graphics (SIGGRAPH Asia 2015)

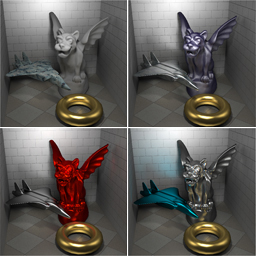

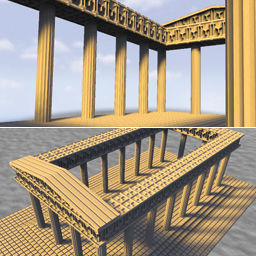

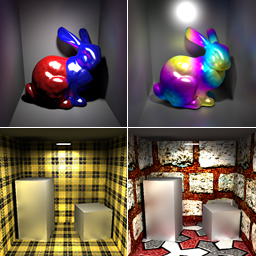

Photon mapping (PM) has been widely regarded as an efficient solution for light transport simulation, including challenging caustics paths and many-bounce indirect lighting. The efficiency of PM comes from reusing traced photons. However, the handling of photon gathering in existing PM algorithms is universally biased – the expected value of their results does not necessarily agree with the true solution of the rendering equation. We present a novel photon gathering method to efficiently achieve unbiased rendering with photon mapping. Instead of aggregating the gathered photons into an estimated density as in classical photon mapping, we process each photon individually and connect the corresponding light sub-path with the eye sub-path that generates the gather point, creating an unbiased path sample. The Monte Carlo estimate for such a path sample is calculated by evaluating all relevant terms in a strict and unbiased way. The end result is a self-contained unbiased sampling technique. We further develop a set of multiple importance sampling (MIS) weights that allow our method to be optimally combined with bidirectional path tracing (BDPT), resulting in an unbiased rendering algorithm that can efficiently handle a wide variety of light paths and that compares favorably with previous algorithms. Experimental results demonstrate the efficacy and robustness of our method.

[paper] [supplementary material] [bibtex] [pptx]
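The abstract combines the new gathering technique with BDPT through multiple importance sampling (MIS). The paper derives its own MIS weights. The minimal sketch below only shows the generic balance and power heuristics (Veach) that such weights are typically built on. It assumes that for a given path we know `pdfs[k]`, the density with which technique k would have generated that same path, with all densities in the same measure. Function names are illustrative, not taken from the paper.

```python
from typing import Sequence

def mis_weight(pdfs: Sequence[float], i: int, beta: float = 2.0) -> float:
    """Power-heuristic weight of technique i (beta = 1 gives the balance heuristic).

    pdfs[k] is the density with which technique k would have produced the
    same path, all expressed in the same measure."""
    denom = sum(p ** beta for p in pdfs)
    return 0.0 if denom == 0.0 else pdfs[i] ** beta / denom

def weighted_contribution(f: float, pdfs: Sequence[float], i: int,
                          beta: float = 2.0) -> float:
    """MIS-weighted Monte Carlo contribution f / p_i of a path sampled by technique i."""
    if pdfs[i] == 0.0:
        return 0.0
    return mis_weight(pdfs, i, beta) * f / pdfs[i]

# Usage: three hypothetical techniques that could each generate the same path.
pdfs = [0.5, 2.0, 0.1]
weights = [mis_weight(pdfs, k) for k in range(len(pdfs))]
```

The weights of all techniques sum to one for every path, and a technique that cannot generate the path gets weight zero. Under these conditions the combined estimator stays unbiased whichever technique produced the sample. The power heuristic (beta = 2) additionally favors the technique with the highest density.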

Hierarchical Diffusion Curves for Accurate Automatic Image Vectorization

Guofu Xie, Xin Sun, Xin Tong, Derek Nowrouzezahrai

ACM Transactions on Graphics (SIGGRAPH Asia 2014)

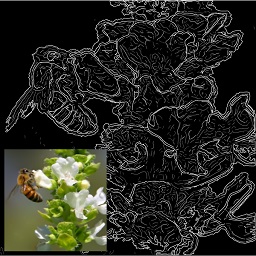

Diffusion curve primitives are a compact and powerful representation for vector images. While several vector image authoring tools leverage these representations, automatically and accurately vectorizing arbitrary raster images using diffusion curves remains a difficult problem. We automatically generate sparse diffusion curve vectorizations of raster images by fitting curves in the Laplacian domain. Our approach is fast, combines Laplacian and bilaplacian diffusion curve representations, and generates a hierarchical representation that accurately reconstructs both vector art and natural images. The key idea of our method is to trace curves in the Laplacian domain, which captures both sharp and smooth image features, across scales, more robustly than previous image- and gradient-domain fitting strategies. The sparse set of curves generated by our method accurately reconstructs images and often closely matches tediously hand-authored curve data. Also, our hierarchical curves are readily usable in all existing editing frameworks. We validate our method on a broad class of images, including natural images, synthesized images with turbulent multi-scale details, and traditional vector art, and we also illustrate simple multi-scale abstraction and color editing results.

[paper] [supplementary material] [bibtex] [pptx]
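The following minimal plain-Python sketch shows why the Laplacian domain localizes sharp features. It computes the 5-point discrete Laplacian of a grayscale image, and the clamped border handling is an assumption. It covers only this first step: the paper's multi-scale curve tracing and fitting are not reproduced. On a step edge, the Laplacian produces a positive/negative pair whose zero crossing marks where a curve would be placed. Smooth (linear) regions vanish.

```python
from typing import List

Image = List[List[float]]

def laplacian(img: Image) -> Image:
    """5-point discrete Laplacian of a grayscale image (clamped borders)."""
    h, w = len(img), len(img[0])

    def px(y: int, x: int) -> float:
        # Clamp coordinates so border pixels reuse their nearest neighbor
        return img[min(max(y, 0), h - 1)][min(max(x, 0), w - 1)]

    return [[px(y - 1, x) + px(y + 1, x) + px(y, x - 1) + px(y, x + 1) - 4.0 * px(y, x)
             for x in range(w)]
            for y in range(h)]

# Usage: a vertical step edge between columns 2 and 3
step = [[0.0, 0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(3)]
row = laplacian(step)[1]  # [0.0, 0.0, 1.0, -1.0, 0.0, 0.0]
```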

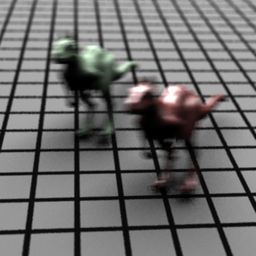

T-ReX: Interactive Global Illumination of Massive Models on Heterogeneous Computing Resources

Tae-Joon Kim, Xin Sun, Sung-Eui Yoon

IEEE Transactions on Visualization and Computer Graphics, 2014

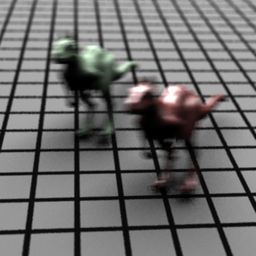

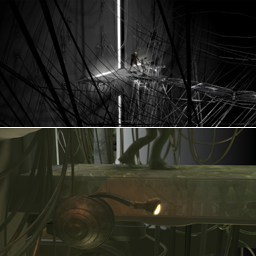

We propose interactive global illumination techniques for a diverse set of massive models. We integrate these techniques within a progressive rendering framework that aims to achieve both high rendering throughput and interactive responsiveness. To achieve high rendering throughput, we utilize heterogeneous computing resources: the CPU and the GPU. To reduce expensive data transmission costs between CPU and GPU, we propose separate, decoupled data representations dedicated to each of the CPU and the GPU. Our representations consist of geometric and volumetric parts, provide different levels of resolution, and support progressive global illumination for massive models. We also propose a novel, augmented volumetric representation that provides additional geometric resolution within our volumetric representation. In addition, we employ tile-based rendering and propose a tile ordering technique that takes visual perception into account. We have tested our approach with a diverse set of large-scale models, including CAD, scanned, and simulation models, that consist of more than 300 million triangles. Using our methods, we achieve ray processing performance of between 3M and 20M rays per second, while limiting the response time to users to between 15 ms and 67 ms. We also allow interactive modification of lighting and material settings, while efficiently supporting novel view rendering.

[paper] [bibtex] [pptx] [project page]

Line Segment Sampling with Blue-Noise Properties

Xin Sun, Kun Zhou, Jie Guo, Guofu Xie, Jingui Pan, Wencheng Wang, Baining Guo

ACM Transactions on Graphics (SIGGRAPH 2013)

Line segment sampling has recently been adopted in many rendering algorithms for better handling of a wide range of effects such as motion blur, defocus blur and scattering media. A question naturally raised is how to generate line segment samples with good properties that can effectively reduce variance and aliasing artifacts observed in the rendering results. This paper studies this problem and presents a frequency analysis of line segment sampling. The analysis shows that the frequency content of a line segment sample is equivalent to the weighted frequency content of a point sample. The weight introduces anisotropy that smoothly changes among point samples, line segment samples and line samples according to the lengths of the samples. Line segment sampling thus makes it possible to achieve a balance between noise (point sampling) and aliasing (line sampling) under the same sampling rate. Based on the analysis, we propose a line segment sampling scheme to preserve blue-noise properties of samples which can significantly reduce noise and aliasing artifacts in reconstruction results. We demonstrate that our sampling scheme improves the quality of depth-of-field rendering, motion blur rendering, and temporal light field reconstruction.

[paper] [supplementary material] [sequences] [bibtex] [pptx]
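The claim that a segment's spectrum is a weighted point spectrum follows from integrating the Fourier kernel along the segment. Consider a unit-mass segment with uniform density, length l, center c, and unit direction d. Its transform at frequency f is exp(-2πi f·c) · sinc(l f·d), where sinc(x) = sin(πx)/(πx). The plain-Python sketch below evaluates this, and the helper names are hypothetical. The sinc factor is the anisotropic weight described in the abstract. With l = 0 it reduces to a point sample. For long segments it suppresses frequencies along d and keeps those perpendicular to d, approaching a line sample.

```python
import cmath
import math
from typing import Sequence, Tuple

Vec2 = Tuple[float, float]

def sinc(x: float) -> float:
    """Normalized sinc: sin(pi x) / (pi x), with sinc(0) = 1."""
    if x == 0.0:
        return 1.0
    return math.sin(math.pi * x) / (math.pi * x)

def segment_spectrum(f: Vec2, center: Vec2, direction: Vec2, length: float) -> complex:
    """Fourier transform at frequency f of a unit-mass uniform segment.

    direction must be a unit vector. The result is the point-sample term
    exp(-2*pi*i f.c) scaled by the anisotropic weight sinc(length * f.d)."""
    f_dot_c = f[0] * center[0] + f[1] * center[1]
    f_dot_d = f[0] * direction[0] + f[1] * direction[1]
    return cmath.exp(-2j * math.pi * f_dot_c) * sinc(length * f_dot_d)

def power_spectrum(f: Vec2, samples: Sequence[Tuple[Vec2, Vec2, float]]) -> float:
    """Periodogram |sum_k F_k(f)|^2 / N of (center, direction, length) samples."""
    total = sum(segment_spectrum(f, c, d, l) for c, d, l in samples)
    return abs(total) ** 2 / len(samples)
```

The same periodogram therefore covers point, segment, and line samples, which is the setting in which the abstract's balance between noise and aliasing can be compared.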

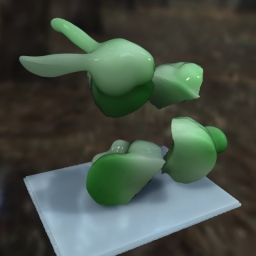

TransCut: Interactive Rendering of Translucent Cutouts

Dongping Li, Xin Sun, Zhong Ren, Stephen Lin, Yiying Tong, Baining Guo, Kun Zhou

IEEE Transactions on Visualization and Computer Graphics, 2013

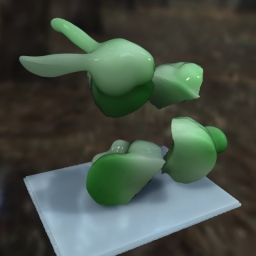

We present TransCut, a technique for interactive rendering of translucent objects undergoing fracturing and cutting operations. As the object is fractured or cut open, the user can directly examine and intuitively understand the complex translucent interior, as well as edit material properties by painting on cross sections and recombining the broken pieces, all with immediate and realistic visual feedback. This new mode of interaction with translucent volumes is made possible by two technical contributions. The first is a novel solver for the diffusion equation (DE) over a tetrahedral mesh that produces high-quality results comparable to the state-of-the-art finite element method of Arbree et al. [1] but at substantially higher speeds. This accuracy and efficiency are obtained by computing the discrete divergences of the diffusion equation and constructing the DE matrix using analytic formulas derived for linear finite elements. The second contribution is a multi-resolution algorithm that significantly accelerates our DE solver while adapting to the frequent changes in the topological structure of dynamic objects. The entire multi-resolution DE solver is highly parallel and easily implemented on the GPU. We believe TransCut provides a novel visual effect for heterogeneous translucent objects undergoing fracturing and cutting operations.

[paper] [mov] [mov(kinect)] [bibtex]
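The abstract mentions analytic formulas for linear finite elements. One standard example of such a closed-form formula, not necessarily the exact one used in TransCut, is the element matrix of a linear tetrahedron for the diffusion operator -∇·(D∇u). The gradients of the barycentric basis functions follow from cross products of edge vectors, so no numerical quadrature is needed. This is a minimal sketch under stated assumptions: a constant diffusion coefficient D per element, hypothetical helper names, and the absorption (mass) term of the full diffusion equation omitted.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]

def sub(u: Vec3, v: Vec3) -> Vec3:
    return (u[0] - v[0], u[1] - v[1], u[2] - v[2])

def dot(u: Vec3, v: Vec3) -> float:
    return u[0] * v[0] + u[1] * v[1] + u[2] * v[2]

def cross(u: Vec3, v: Vec3) -> Vec3:
    return (u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0])

def tet_stiffness(p: Sequence[Vec3], D: float = 1.0) -> List[List[float]]:
    """4x4 element matrix K[i][j] = D * V * grad(phi_i) . grad(phi_j)
    for the linear basis functions phi_i of the tetrahedron p[0..3]."""
    a, b, c = sub(p[1], p[0]), sub(p[2], p[0]), sub(p[3], p[0])
    det = dot(a, cross(b, c))  # 6 x signed volume
    if det == 0:
        raise ValueError("degenerate tetrahedron")
    # Rows of the inverse edge matrix are the gradients of phi_1..phi_3
    g1 = tuple(x / det for x in cross(b, c))
    g2 = tuple(x / det for x in cross(c, a))
    g3 = tuple(x / det for x in cross(a, b))
    # The basis functions sum to 1, so their gradients sum to 0
    g0 = tuple(-(x + y + z) for x, y, z in zip(g1, g2, g3))
    grads = [g0, g1, g2, g3]
    volume = abs(det) / 6.0
    return [[D * volume * dot(gi, gj) for gj in grads] for gi in grads]

# Usage: the reference tetrahedron
K = tet_stiffness([(0, 0, 0), (1, 0, 0), (0, 1, 0), (0, 0, 1)])
```

A global DE matrix would then be assembled by adding each element's 4x4 block into the rows and columns of its four vertices. Each row of K sums to zero, because a constant field has no diffusive flux.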

Interactive Depth-of-Field Rendering with Secondary Rays

Guofu Xie, Xin Sun, Wencheng Wang

Journal of Computer Science and Technology, 2013

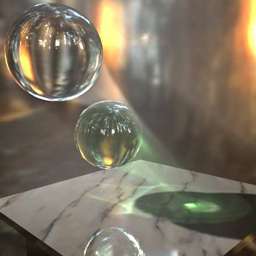

This paper presents an efficient method for tracing secondary rays in depth-of-field (DOF) rendering; including their effects significantly enhances realism. Until now, the effects of secondary rays have received little attention in real-time/interactive DOF rendering, because secondary rays are less coherent than primary rays, which makes them very difficult to handle. We propose novel measures to cluster secondary rays, and use a virtual viewpoint to construct a layered image-based representation of the objects that would be intersected by each cluster of secondary rays. We can therefore exploit the coherence of secondary rays within each cluster to speed up tracing them in DOF rendering. Results show that we can interactively achieve DOF rendering effects with reflections or refractions on a commodity graphics card.

[paper] [avi] [bibtex]

Diffusion Curve Textures for Resolution Independent Texture Mapping

Xin Sun, Guofu Xie, Yue Dong, Stephen Lin, Weiwei Xu, Wencheng Wang, Xin Tong, Baining Guo

ACM Transactions on Graphics (SIGGRAPH 2012)

We introduce a vector representation called diffusion curve textures for mapping diffusion curve images (DCI) onto arbitrary surfaces. In contrast to the original implicit representation of DCIs [Orzan et al. 2008], where determining a single texture value requires iterative computation of the entire DCI via the Poisson equation, diffusion curve textures provide an explicit representation from which the texture value at any point can be solved directly, while preserving the compactness and resolution independence of diffusion curves. This is achieved through a formulation of the DCI diffusion process in terms of Green’s functions. This formulation furthermore allows the texture value of any rectangular region (e.g. pixel area) to be solved in closed form, which facilitates anti-aliasing. We develop a GPU algorithm that renders anti-aliased diffusion curve textures in real time, and demonstrate the effectiveness of this method through high quality renderings with detailed control curves and color variations.

[paper] [divx] [bibtex] [pptx]
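The Green's-function formulation mentioned above rests on a classical identity. It is shown here as a worked equation rather than as the paper's own derivation, whose specific kernel and boundary handling may differ. Let u be harmonic (Δu = 0) in a region Ω with boundary ∂Ω and outward normal n. Green's representation formula, with the 2D free-space Green's function G, then gives u at any interior point x directly from boundary data:

```latex
G(\mathbf{x},\mathbf{y}) = \frac{1}{2\pi}\,\ln\lVert \mathbf{x}-\mathbf{y} \rVert,
\qquad
\Delta_{\mathbf{y}}\, G(\mathbf{x},\mathbf{y}) = \delta(\mathbf{x}-\mathbf{y}),

u(\mathbf{x}) = \oint_{\partial\Omega}
  \left[ u(\mathbf{y})\,\frac{\partial G(\mathbf{x},\mathbf{y})}{\partial n_{\mathbf{y}}}
       - G(\mathbf{x},\mathbf{y})\,\frac{\partial u(\mathbf{y})}{\partial n_{\mathbf{y}}} \right]
  \mathrm{d}s_{\mathbf{y}},
\qquad \mathbf{x} \in \Omega .
```

As a sanity check, u ≡ 1 gives ∮ ∂G/∂n ds = ∫ ΔG dy = 1. In the diffusion-curve setting the color field is harmonic away from the curves. This suggests why a value at a single point can be obtained from curve data alone, instead of by solving the Poisson equation over the whole image.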

Memory-Scalable GPU Spatial Hierarchy Construction

Qiming Hou, Xin Sun, Kun Zhou, Christian Lauterbach, Dinesh Manocha

IEEE Transactions on Visualization and Computer Graphics, 2011

Recent GPU algorithms for constructing spatial hierarchies have achieved promising performance for moderately complex models by using the BFS (breadth-first search) construction order. While able to exploit the massive parallelism of the GPU, the BFS order also consumes excessive GPU memory, which becomes a serious issue for interactive applications involving very complex models with more than a few million triangles. In this paper, we propose to use the PBFS (partial breadth-first search) construction order to control memory consumption while maximizing performance. We apply the PBFS order to two hierarchy construction algorithms. The first is a kd-tree construction algorithm that automatically balances the level of parallelism against intermediate memory usage. With PBFS, peak memory consumption during construction can be efficiently controlled without costly CPU-GPU data transfer. We also develop memory allocation strategies to effectively limit memory fragmentation. The resulting algorithm scales well with GPU memory and constructs kd-trees of models with millions of triangles at interactive rates on GPUs with 1 GB of memory. Compared with existing algorithms, our algorithm is an order of magnitude more scalable for a given GPU memory bound. The second is an out-of-core BVH (bounding volume hierarchy) construction algorithm for very large scenes, also based on the PBFS construction order. At each iteration, all constructed nodes are transferred to CPU memory, and the GPU memory is freed for the next iteration. In this way, the algorithm can build trees that are too large to be stored in GPU memory. Experiments show that our algorithm can construct BVHs for scenes with up to 20M triangles, several times larger than those handled by previous GPU algorithms.

[paper] [mov] [bibtex]
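The PBFS idea can be illustrated with a deliberately simplified, sequential Python sketch. Nodes are expanded in batches, where one batch stands for one parallel GPU step. Each batch is capped by a primitive-count budget that stands in for the GPU memory limit. Nodes that do not fit wait on a stack, so construction falls back toward depth-first order whenever the breadth-first frontier would be too large. The primitive-range node layout, the midpoint split, and the budget rule are all assumptions for illustration. They are not the paper's kd-tree/BVH split criteria or memory allocation strategies.

```python
from typing import List, Tuple

Node = Tuple[int, int]  # hypothetical node: [start, end) range of primitive indices

def split_middle(node: Node) -> Tuple[Node, Node]:
    """Stand-in for a real split heuristic: halve the primitive range."""
    start, end = node
    mid = (start + end) // 2
    return (start, mid), (mid, end)

def pbfs_build(n_prims: int, budget: int, leaf_size: int = 4) -> Tuple[List[Node], int]:
    """Return (leaves, number of batched steps) for a memory-bounded build."""
    if leaf_size < 1:
        raise ValueError("leaf_size must be at least 1")
    pending: List[Node] = [(0, n_prims)]  # nodes waiting to be expanded
    leaves: List[Node] = []
    steps = 0
    while pending:
        # Take nodes from the top of the stack while the batch fits the budget
        # (a single oversized node is still taken so progress is guaranteed).
        batch: List[Node] = []
        used = 0
        while pending:
            start, end = pending[-1]
            if batch and used + (end - start) > budget:
                break
            batch.append(pending.pop())
            used += end - start
        steps += 1
        # One "parallel" step: every node in the batch is handled independently.
        children: List[Node] = []
        for node in batch:
            if node[1] - node[0] <= leaf_size:
                leaves.append(node)
            else:
                children.extend(split_middle(node))
        pending.extend(children)
    return leaves, steps

# Usage: with a budget as large as the model, each step expands one full level
leaves, steps = pbfs_build(64, budget=64)  # steps == 5
```

With an unlimited budget, the sketch degenerates to pure BFS. A smaller budget bounds the work and memory per step at the cost of more, smaller steps.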

Radiance Transfer Biclustering for Real-time All-frequency Bi-scale Rendering

Xin Sun, Qiming Hou, Zhong Ren, Kun Zhou, Baining Guo

IEEE Transactions on Visualization and Computer Graphics, 2011

We present a real-time algorithm to render all-frequency radiance transfer at both the macro-scale and the meso-scale. At the meso-scale, shading is computed per pixel by integrating the product of the local incident radiance and a bidirectional texture function (BTF). At the macro-scale, the precomputed transfer matrix, which transfers the global incident radiance to the local incident radiance at each vertex, is losslessly compressed by a novel biclustering technique. The biclustering is applied directly to the radiance transfer represented in a pixel basis, in which the BTF is naturally defined. It exploits the coherence in the transfer matrix and a property of matrix element values to reduce both storage and run-time computation cost. Our new algorithm renders realistic materials and shadows under all-frequency direct environment lighting at real-time frame rates. Comparisons show that our algorithm generates images that compare favorably with reference ray tracing results, and has clear advantages over alternative methods in storage and preprocessing time.

[paper] [mov] [bibtex]

Line Space Gathering for Single Scattering in Large Scenes

Xin Sun, Kun Zhou, Stephen Lin, Baining Guo

ACM Transactions on Graphics (SIGGRAPH 2010)

We present an efficient technique to render single scattering in large scenes with reflective and refractive objects and homogeneous participating media. Efficiency is obtained by evaluating the final radiance along a viewing ray directly from the lighting rays passing near to it, and by rapidly identifying such lighting rays in the scene. To facilitate the search for nearby lighting rays, we convert lighting rays and viewing rays into 6D points and planes according to their Plücker coordinates and coefficients, respectively. In this 6D line space, the closest-line search becomes a query for the points closest to a plane, which we significantly accelerate using a spatial hierarchy of the 6D points. This approach to lighting ray gathering supports complex light paths with multiple reflections and refractions, and avoids the use of a volume representation, which is expensive for large-scale scenes. This method also utilizes far fewer lighting rays than the number of photons needed in traditional volumetric photon mapping, and does not discretize viewing rays into numerous steps for ray marching. With this approach, results similar to volumetric photon mapping are obtained efficiently in terms of both storage and computation.

[paper] [divx] [bibtex] [ppt & pptx] [scenes]
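The 6D formulation can be sketched with standard Plücker line geometry in plain Python. The helper names are hypothetical, and the paper's exact distance criterion and 6D hierarchy are not reproduced. A line through point p with direction d has coordinates (d, m), where m = p × d. Map a lighting ray to the 6D point (d1, m1) and a viewing ray to the 6D plane coefficients (m2, d2). Their inner product is then d1·m2 + d2·m1 = (p1 − p2)·(d1 × d2). This value is linear in the lighting ray's 6D point. After dividing by |d1 × d2|, it equals the closest distance between the two lines, up to sign.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]
Line = Tuple[Vec3, Vec3]  # (direction d, moment m)

def dot(u, v) -> float:
    return sum(a * b for a, b in zip(u, v))

def cross(u: Vec3, v: Vec3) -> Vec3:
    return (u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0])

def plucker(origin: Vec3, direction: Vec3) -> Line:
    """Plücker coordinates (d, m) with moment m = origin x d."""
    return direction, cross(origin, direction)

def as_point(line: Line) -> Tuple[float, ...]:
    d, m = line
    return (*d, *m)            # lighting ray -> 6D point

def as_plane(line: Line) -> Tuple[float, ...]:
    d, m = line
    return (*m, *d)            # viewing ray -> 6D plane coefficients

def side(light: Line, view: Line) -> float:
    """d1.m2 + d2.m1 = (p1 - p2).(d1 x d2); zero iff the lines are coplanar."""
    return dot(as_plane(view), as_point(light))

def line_distance(light: Line, view: Line) -> float:
    """Closest distance between two non-parallel lines."""
    c = cross(light[0], view[0])
    norm = math.sqrt(dot(c, c))
    if norm == 0.0:
        raise ValueError("parallel lines")
    return abs(side(light, view)) / norm

# Usage: the x-axis versus a line along y passing through (0, 0, 2)
light = plucker((0.0, 0.0, 0.0), (1.0, 0.0, 0.0))
view = plucker((0.0, 0.0, 2.0), (0.0, 1.0, 0.0))
```

Because `side` is a dot product with fixed plane coefficients, searching for lighting rays near a viewing ray becomes a point-to-plane proximity query in 6D, which is the structure the abstract exploits.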

RenderAnts: Interactive Reyes Rendering on GPUs

Kun Zhou, Qiming Hou, Zhong Ren, Minmin Gong, Xin Sun, Baining Guo

ACM Transactions on Graphics (SIGGRAPH Asia 2009)

We present RenderAnts, the first system that enables interactive Reyes rendering on GPUs. Taking RenderMan scenes and shaders as input, our system first compiles RenderMan shaders to GPU shaders. Then all stages of the basic Reyes pipeline, including bounding/splitting, dicing, shading, sampling, compositing and filtering, are executed on GPUs using carefully designed data-parallel algorithms. Advanced effects such as shadows, motion blur and depth-of-field can also be rendered. In order to avoid exhausting GPU memory, we introduce a novel dynamic scheduling algorithm to bound the memory consumption during rendering. The algorithm automatically adjusts the amount of data being processed in parallel at each stage so that all data can be maintained in the available GPU memory. This allows our system to maximize the parallelism in all individual stages of the pipeline and achieve superior performance. We also propose a multi-GPU scheduling technique based on work stealing so that the system can support scalable rendering on multiple GPUs. The scheduler is designed to minimize inter-GPU communication and balance workloads among GPUs.

We demonstrate the potential of RenderAnts using several complex RenderMan scenes and an open source movie entitled Elephants Dream. Compared to Pixar's PRMan, our system can generate images of comparably high quality, but is over one order of magnitude faster. For moderately complex scenes, the system allows the user to change the viewpoint, lights and materials while producing photorealistic results at interactive speed.

[paper] [wmv] [bibtex]

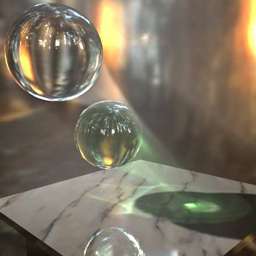

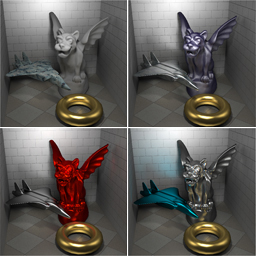

Interactive Relighting of Dynamic Refractive Objects

Xin Sun, Kun Zhou, Eric Stollnitz, Jiaoying Shi, Baining Guo

ACM Transactions on Graphics (SIGGRAPH 2008)

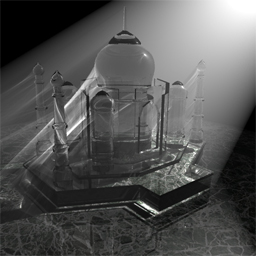

We present a new technique for interactive relighting of dynamic refractive objects with complex material properties. We describe our technique in terms of a rendering pipeline in which each stage runs entirely on the GPU. The rendering pipeline converts surfaces to volumetric data, traces the curved paths of photons as they refract through the volume, and renders arbitrary views of the resulting radiance distribution. Our rendering pipeline is fast enough to permit interactive updates to lighting, materials, geometry, and viewing parameters without any precomputation. Applications of our technique include the visualization of caustics, absorption, and scattering while running physical simulations or while manipulating surfaces in real time.

[paper] [divx] [bibtex] [ppt]
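In a medium whose refractive index n(x) varies continuously, the curved photon paths described in the abstract obey the ray equation d/ds (n dx/ds) = ∇n. The sketch below integrates that equation with a simple first-order step in plain Python. The step rule, the function name, and the analytic index fields in the usage example are assumptions. In the abstract's pipeline, n and ∇n would instead come from the volumetric data built from the surfaces.

```python
import math
from typing import Callable, Tuple

Vec3 = Tuple[float, float, float]

def trace_photon(x: Vec3, direction: Vec3,
                 n: Callable[[Vec3], float],
                 grad_n: Callable[[Vec3], Vec3],
                 ds: float, steps: int) -> Vec3:
    """Integrate d/ds (n dx/ds) = grad n with a first-order step rule.

    v = n * (unit direction) is updated by the index gradient, and the
    position advances along v / n, so photons bend toward higher n."""
    length = math.sqrt(sum(c * c for c in direction))
    v = tuple(n(x) * c / length for c in direction)
    for _ in range(steps):
        n_here = n(x)          # index and gradient at the current position
        g = grad_n(x)
        x = tuple(xi + ds / n_here * vi for xi, vi in zip(x, v))
        v = tuple(vi + ds * gi for vi, gi in zip(v, g))
    return x

# Usage: an index that grows with y bends a photon launched along +x toward +y
bent = trace_photon((0.0, 0.0, 0.0), (1.0, 0.0, 0.0),
                    lambda p: 1.0 + 0.1 * p[1], lambda p: (0.0, 0.1, 0.0),
                    ds=0.1, steps=20)
```

In a homogeneous region the gradient is zero and the photon travels in a straight line, so refraction only appears where the index changes.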

Interactive Relighting with Dynamic BRDFs

Xin Sun, Kun Zhou, Yanyun Chen, Stephen Lin, Jiaoying Shi, Baining Guo

ACM Transactions on Graphics (SIGGRAPH 2007)

We present a technique for interactive relighting in which source radiance, viewing direction, and BRDFs can all be changed on the fly. In handling dynamic BRDFs, our method efficiently accounts for the effects of BRDF modification on the reflectance and incident radiance at a surface point. For reflectance, we develop a BRDF tensor representation that can be factorized into adjustable terms for lighting, viewing, and BRDF parameters. For incident radiance, there exists a non-linear relationship between indirect lighting and BRDFs in a scene, which makes linear light transport frameworks such as PRT unsuitable. To overcome this problem, we introduce precomputed transfer tensors (PTTs) which decompose indirect lighting into precomputable components that are each a function of BRDFs in the scene, and can be rapidly combined at run time to correctly determine incident radiance. We additionally describe a method for efficient handling of high-frequency specular reflections by separating them from the BRDF tensor representation and processing them using precomputed visibility information. With relighting based on PTTs, interactive performance with indirect lighting is demonstrated in applications to BRDF animation and material tuning.

[paper] [divx] [wmv] [bibtex] [ppt]

Interactive Global Illumination Rendering with Spatial-Variant Dynamic Materials (Chinese)

Xin Sun, Kun Zhou, Jiaoying Shi

Chinese Journal of Software, July, 2008

We propose a method for interactive global illumination rendering with spatially variant dynamic materials under complex illumination. With spatially variant dynamic materials, the materials of the scene can be changed during rendering, and different parts of an object can be changed differently. The non-linear relationship between materials and outgoing radiance prevents users from changing materials in most existing interactive global illumination rendering algorithms. If different parts of an object are covered with different materials, the effect of the materials on the outgoing radiance becomes even more complex. As a result, no existing interactive global illumination rendering algorithm allows users to make different changes to different parts of an object. In this paper, we approximate a region with spatially variant dynamic materials by dividing it into a number of sub-regions, each with a uniform material. Radiance transferred in the scene may be reflected by different sub-regions in succession, so we divide the outgoing radiance according to the different sequences of reflecting sub-regions. We represent all materials with a linear basis and apply the basis to all sub-regions to obtain all the different distributions of the material basis. We precompute every part of the radiance for all material basis distributions. During rendering, we use the materials' basis coefficients to combine the corresponding precomputed data, achieving global illumination effects at interactive rates.

[webpage] [paper] [bibtex]

Real-Time Global Illumination Rendering with Dynamic Materials (Chinese)

Xin Sun, Kun Zhou, Jiaoying Shi

Chinese Journal of Software, April, 2008

All previous algorithms for real-time global illumination rendering based on precomputation assume that materials are invariant, which makes the transfer from lighting to outgoing radiance a linear transformation. By precomputing this linear transformation, real-time global illumination rendering can be achieved under dynamic lighting. This linearity does not hold when materials change, and so far no algorithms are applicable to scenes with dynamic materials. This paper introduces a real-time rendering method for scenes with editable materials under direct and indirect illumination. The outgoing radiance is separated into parts according to the number of reflections and the materials involved in each reflection. Each part of the radiance depends linearly on the product of the materials along its reflection path, so the nonlinear problem is converted into a linear one. All available materials are represented as linear combinations of a basis. The basis is applied to the scene to obtain a number of different material distributions, and for each material distribution all parts of the radiance are precomputed. At run time, the method simply combines the precomputed data linearly, using the materials' coefficients in the basis, to achieve global illumination effects in real time. The method applies to scenes with fixed geometry, fixed lighting, and a fixed view direction, and the materials are represented with bidirectional reflectance distribution functions (BRDFs), which do not account for refraction or translucency. The implementation simulates up to two bounces of light at real-time frame rates and reproduces global illumination phenomena such as color bleeding and caustics.

[webpage] [paper] [wmv] [bibtex]
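The linearization described in the abstract above can be sketched under simplifying assumptions of our own. There is one editable material, expressed as coefficients c_i over a material basis. Radiance is a scalar per pixel, and at most two bounces are considered. One-bounce radiance is then linear in the coefficients. Two-bounce radiance depends on the product of the materials along the path, Σ_i Σ_j c_i c_j L_ij. Both kinds of term can therefore be precomputed per basis element (or basis pair) and combined at run time. The data layout and function name are hypothetical.

```python
from typing import List, Sequence

Image = List[float]  # one scalar radiance value per pixel (assumption)

def combine(coeffs: Sequence[float],
            one_bounce: Sequence[Image],
            two_bounce: Sequence[Sequence[Image]]) -> Image:
    """Outgoing radiance for a material given by basis coefficients.

    one_bounce[i]: precomputed radiance reflected once by basis material i.
    two_bounce[i][j]: precomputed radiance reflected by basis i, then basis j."""
    n_pix = len(one_bounce[0])
    out = [0.0] * n_pix
    for i, ci in enumerate(coeffs):
        for p in range(n_pix):
            out[p] += ci * one_bounce[i][p]          # linear in the material
        for j, cj in enumerate(coeffs):
            w = ci * cj                              # product of path materials
            for p in range(n_pix):
                out[p] += w * two_bounce[i][j][p]
    return out

# Usage: two basis materials and a single pixel
one = [[1.0], [4.0]]
two = [[[1.0], [0.0]], [[0.0], [2.0]]]
radiance = combine([2.0, 0.5], one, two)  # [8.5]
```

Doubling the coefficients doubles the one-bounce part but quadruples the two-bounce part. This is why the radiance is nonlinear in the material, yet still a fixed combination of precomputed terms.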

Ph.D. Thesis